Using GPT-OSS with Ollama: Run Open-Source AI Models Locally in 2025

- Philip Moses

- Aug 6, 2025

In 2025, AI development is shifting toward open-weight language models that give you full control and customization without relying on proprietary tools. One powerful combination is OpenAI's GPT-OSS series with Ollama, which lets you run high-performance LLMs directly on your own machine.

In this blog, we'll introduce GPT-OSS, highlight its standout features, and walk you through running it locally using Ollama—step-by-step.

🧠 What is GPT-OSS?

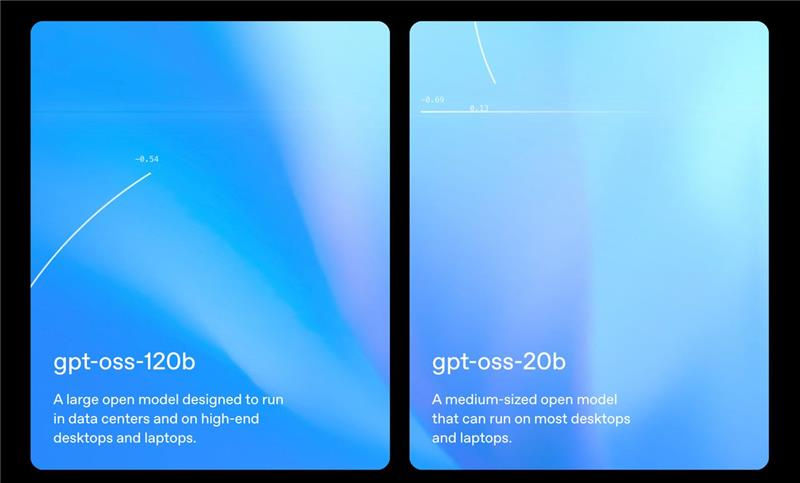

GPT-OSS is a family of open-weight language models—notably gpt-oss-120b and gpt-oss-20b—released under the Apache 2.0 license. These models focus on high performance, tool use, and reasoning while staying affordable and accessible for developers, researchers, and enterprises alike.

🔍 Key Features of GPT-OSS

Superior Reasoning:

- gpt-oss-120b comes close to OpenAI's o4-mini on core reasoning benchmarks
- gpt-oss-20b is comparable to o3-mini on common benchmarks

Efficient Deployment:

- 120b runs on a single 80 GB GPU
- 20b needs only 16 GB of memory, which makes it a fit for laptops or local servers

Tool Use + Function Calling:

- Designed for agent-based workflows, with strong instruction following and tool use during reasoning

Customizability + CoT Reasoning:

- Developers can control output format and reasoning depth via Structured Outputs and Chain-of-Thought reasoning

Safety:

- Trained and evaluated under OpenAI's Preparedness Framework, targeting safety standards comparable to OpenAI's frontier models

⚙️ Architecture & Training

GPT-OSS uses a Transformer-based mixture-of-experts (MoE) architecture that activates only a subset of its parameters for each token, which keeps inference efficient:

- 120b: 5.1B active out of 117B total parameters
- 20b: 3.6B active out of 21B total parameters
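To make the efficiency claim concrete, the short sketch below works out what share of each model is active per token, using only the parameter counts quoted above. The dictionary layout and the function name are illustrative choices, not part of any GPT-OSS tooling.

```python
# Parameter counts (in billions) as quoted in this post.
MODELS = {
    "gpt-oss-120b": {"total_b": 117.0, "active_b": 5.1},
    "gpt-oss-20b": {"total_b": 21.0, "active_b": 3.6},
}


def active_fraction(name: str) -> float:
    """Return the share of parameters that is active for each token."""
    model = MODELS[name]
    return model["active_b"] / model["total_b"]


if __name__ == "__main__":
    for name in MODELS:
        print(f"{name}: {active_fraction(name):.1%} of parameters active per token")
```

The result is roughly 4% for the 120b model and 17% for the 20b model, which is why compute per token stays far below what the total parameter count suggests.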

Advanced features include:

- Rotary Positional Embedding (RoPE)
- Grouped multi-query attention
- Context lengths up to 128k tokens
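For readers new to RoPE, here is a minimal pure-Python sketch of the general idea: each pair of dimensions in a query or key vector is rotated by an angle proportional to the token position. The adjacent-pair layout and the base of 10000 are common textbook choices assumed here for illustration; they are not taken from the GPT-OSS implementation.

```python
import math


def rope_rotate(vec, position, base=10000.0):
    """Rotate each pair (vec[i], vec[i + 1]) by position * base**(-i / d)."""
    d = len(vec)
    if d % 2 != 0:
        raise ValueError("RoPE needs an even number of dimensions")
    out = []
    for i in range(0, d, 2):
        theta = position * base ** (-i / d)  # lower frequency for later pairs
        cos_t, sin_t = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out.extend([x * cos_t - y * sin_t, x * sin_t + y * cos_t])
    return out


def dot(a, b):
    """Plain dot product of two equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))


if __name__ == "__main__":
    q = [1.0, 2.0, 3.0, 4.0]
    k = [0.5, -1.0, 2.0, 0.25]
    # Both pairs of positions are 2 tokens apart, so the two scores should match.
    print(dot(rope_rotate(q, 5), rope_rotate(k, 3)))
    print(dot(rope_rotate(q, 12), rope_rotate(k, 10)))
```

The property worth noticing is that the attention score between a rotated query and a rotated key depends only on how far apart the two positions are, not on their absolute values.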

The models were trained mainly on English text with a focus on STEM, coding, and general knowledge, then aligned using supervised fine-tuning and a high-compute reinforcement learning (RL) stage.

🚀 Running GPT-OSS Locally Using Ollama

Ollama is a tool built for running LLMs locally on your own hardware. It simplifies installation, interaction, and deployment. Once a model has been downloaded, you can run it without a hosted API or an internet connection.

✅ Why Use Ollama?

- Fast setup
- Privacy-first (no cloud dependency)
- No API costs
- Optimized for GPU/Apple Silicon

🖥️ System Requirements

| Model | Recommended VRAM |
| --- | --- |
| gpt-oss-20b | ≥ 16 GB |
| gpt-oss-120b | ≥ 60 GB |

⚠️ CPU fallback is possible but slow.
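As a quick way to apply the table above, the hypothetical helper below returns the largest model tag whose recommended memory fits a given budget. The thresholds are copied from the table; the function name and the idea of picking the largest fit are assumptions made for this sketch, and real-world headroom will depend on context length and whatever else is using the GPU.

```python
# Recommended memory in GB, copied from the requirements table.
RECOMMENDED_GB = {"gpt-oss:20b": 16, "gpt-oss:120b": 60}


def pick_model(available_gb: float):
    """Return the largest tag that fits in available_gb, or None if none fit."""
    fitting = [
        (needed, tag) for tag, needed in RECOMMENDED_GB.items() if available_gb >= needed
    ]
    # max() compares the memory requirement first, so the largest model wins.
    return max(fitting)[1] if fitting else None


if __name__ == "__main__":
    for gb in (8, 24, 80):
        print(f"{gb} GB -> {pick_model(gb)}")
```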

📦 Quick Setup Guide

1️⃣ Install Ollama

Visit Ollama’s official site and install it for macOS, Windows, or Linux.

2️⃣ Pull the Models

    # For gpt-oss-20b
    ollama pull gpt-oss:20b

    # For gpt-oss-120b
    ollama pull gpt-oss:120b

3️⃣ Run the Chat Session

    ollama run gpt-oss:20b

Ollama uses an OpenAI-style chat template for familiar interactions.
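Before wiring the model into an application, it can help to confirm that the Ollama server is running and that the model was actually pulled. The sketch below queries Ollama's `/api/tags` endpoint, which lists locally available models, using only the standard library. The default address `http://localhost:11434` matches the one used later in this post; the function names are illustrative.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default local Ollama address


def model_names(tags_payload: dict) -> list:
    """Extract model names from a decoded /api/tags response."""
    return [entry["name"] for entry in tags_payload.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> list:
    """Ask the local Ollama server which models have been pulled."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return model_names(json.load(resp))
```

Calling `"gpt-oss:20b" in list_local_models()` then tells you whether the pull succeeded. If the server is not running, `urlopen` raises an error, so wrap the call in a `try`/`except OSError` block when you need a friendlier message.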

🛠️ Using Ollama API (Chat Completion)

Ollama supports a Chat Completions-compatible API, making integration smooth for OpenAI developers.
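Because the endpoint follows the Chat Completions request shape, it can also be called without any SDK. The following is a minimal standard-library sketch, assuming the server listens on the default local address; `build_chat_request` and `chat` are hypothetical helper names, and error handling is left out for brevity.

```python
import json
import urllib.request


def build_chat_request(prompt, model="gpt-oss:20b", base_url="http://localhost:11434/v1"):
    """Build a POST request in the Chat Completions format."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt, **kwargs):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt, **kwargs)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the `openai` package installed, the same call is shorter: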

    from openai import OpenAI

    # Point the OpenAI client at the local Ollama server.
    client = OpenAI(
        base_url="http://localhost:11434/v1",
        api_key="ollama",  # placeholder; Ollama does not check the key
    )

    response = client.chat.completions.create(
        model="gpt-oss:20b",
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": "Explain what MXFP4 quantization is."},
        ],
    )

    print(response.choices[0].message.content)

⚡ Function Calling with Ollama

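Ollama also supports function calling: you describe the available tools, and the model decides when to call them. When the model responds with a tool call, run the tool yourself and send the result, along with the model's reasoning, back in a follow-up request. Repeat until the model returns a final answer.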
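The "run the tool and send the result back" step can be sketched as a small dispatcher. It takes the tool calls from a response as plain dictionaries (an assumption for this sketch; the `openai` client returns objects, which you would first convert to dictionaries), looks up each function in a registry, and builds the `role: "tool"` messages to append to the conversation. `TOOL_REGISTRY` and `run_tool_calls` are hypothetical names, and `get_weather` is a stand-in that returns a canned string.

```python
import json


def get_weather(city: str) -> str:
    """Stand-in tool; a real version would query a weather service."""
    return f"The weather in {city} is sunny."


# Maps the tool names the model may request to local Python functions.
TOOL_REGISTRY = {"get_weather": get_weather}


def run_tool_calls(tool_calls, registry=TOOL_REGISTRY):
    """Execute each requested call and build the matching tool messages."""
    messages = []
    for call in tool_calls:
        func = registry[call["function"]["name"]]
        # Arguments arrive as a JSON-encoded string, not a dict.
        args = json.loads(call["function"]["arguments"])
        messages.append(
            {
                "role": "tool",
                "tool_call_id": call["id"],
                "content": str(func(**args)),
            }
        )
    return messages
```

You would append the assistant message and these tool messages to `messages` and call the API again, repeating until the response contains no further tool calls. The tool schema the model sees, and the request that produces those tool calls, look like this: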

    tools = [
        {
            "type": "function",
            "function": {
                "name": "get_weather",
                "description": "Get current weather in a given city",
                "parameters": {
                    "type": "object",
                    "properties": {"city": {"type": "string"}},
                    "required": ["city"],
                },
            },
        }
    ]

    response = client.chat.completions.create(
        model="gpt-oss:20b",
        messages=[{"role": "user", "content": "What's the weather in Berlin right now?"}],
        tools=tools,
    )

    print(response.choices[0].message)

🧩 GPT-OSS with Agents SDK (Python & TypeScript)

Python Example (via LiteLLM):

    import asyncio

    from agents import Agent, Runner, function_tool, set_tracing_disabled
    from agents.extensions.models.litellm_model import LitellmModel

    # Tracing would otherwise try to export to OpenAI; disable it for local use.
    set_tracing_disabled(True)


    @function_tool
    def get_weather(city: str):
        """Return a canned weather report for the given city."""
        print(f"[debug] getting weather for {city}")
        return f"The weather in {city} is sunny."


    # Defaults let main() run without arguments; the API key is a placeholder.
    async def main(model: str = "ollama/gpt-oss:120b", api_key: str = "ollama"):
        agent = Agent(
            name="Assistant",
            instructions="You only respond in haikus.",
            model=LitellmModel(model=model, api_key=api_key),
            tools=[get_weather],
        )

        result = await Runner.run(agent, "What's the weather in Tokyo?")
        print(result.final_output)


    if __name__ == "__main__":
        asyncio.run(main())

This lets your AI agent use tools and answer queries with GPT-OSS locally.

🧑💻 TypeScript SDK Integration

JavaScript and TypeScript developers can point an OpenAI-compatible SDK at the same local endpoint (http://localhost:11434/v1) and build LLM agents on GPT-OSS in much the same way.

🧾 Conclusion

The GPT-OSS series opens the door to enterprise-grade, reasoning-rich AI with open weights under the Apache 2.0 license. By pairing it with Ollama, you can bring that power directly onto your local machine, saving cost, improving privacy, and keeping full control.

Whether you're exploring LLMs, building intelligent agents, or prototyping next-gen tools, GPT-OSS + Ollama is a combo that delivers.

Start building smarter, faster, and locally, today.
